The last time we wrote about ChatGPT, it had just entered our lives. At that point, we had only begun to scratch the surface of the tool’s abilities and could only speculate about its dangers and possible malicious use cases. Since then, more AI-powered conversational agents have been created, giving organizations more opportunities. While these chatbots offer convenience and human-like interactions, we now know that they also introduce new cyber threats that users and CISOs must be aware of.

In this article, we will explore ChatGPT cyber risks and provide insights into how individuals and organizations can protect themselves against potential threats.

Phishing Attacks

ChatGPT’s ability to engage in human-like conversations can make it a potential tool for cybercriminals to carry out phishing attacks. By imitating trusted entities or individuals, malicious actors can trick users into revealing sensitive information such as passwords, financial details, or personal data. Users must exercise caution when interacting with chatbots and remain vigilant about verifying the authenticity of the information they provide.

Social Engineering Exploitation

ChatGPT’s natural language processing capabilities enable it to convincingly simulate human responses, making it a prime vehicle for social engineering exploitation. Cybercriminals can exploit the trust users place in AI chatbots to manipulate them into disclosing confidential information or engaging in harmful actions.

Also, in the last three months, new “ChatGPT-like” tools have appeared with few guarantees of their authenticity or origins. In some cases, hackers even pay for Google-sponsored ads to appear more credible. Several websites and browser extensions that claim to be chatbot tools have appeared with the aim of harvesting all sorts of user data.

ChatGPT in its original form is an internet-based interface that does not require any tools to be downloaded to a user’s endpoint. AI chatbots like ChatGPT can be manipulated by cybercriminals to impersonate individuals or trusted entities. This can lead to identity theft, fraud, or manipulation of users’ beliefs and preferences. It is important to exercise caution and verify the authenticity of chatbot interactions, especially when sensitive or personal information is involved. Users should refrain from sharing personal or confidential details with AI chatbots, or with any software they download to their endpoint in order to interact with them, especially when the requests seem suspicious or unusual.

The following are just a few examples of websites and extensions that impersonate ChatGPT:

• chat-gpt-pc.online

• chat-gpt-online-pc.com

• chatgpt4beta.com

• chat-gpt-ai-pc.info

• chat-gpt-for-windows.com

• ChatGPT for Google

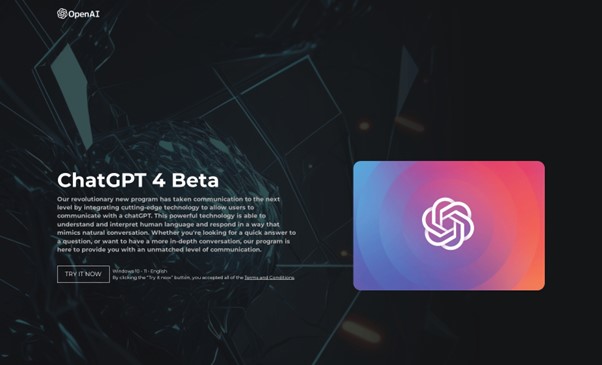

Figure 1: The original ChatGPT page

Figure 2: A malicious ChatGPT impersonation site
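As a rough illustration of how the impersonation domains listed above could feed a simple check, the following Python sketch classifies a URL by its hostname. The function name `classify_url` and the contents of `OFFICIAL_HOSTS` are assumptions made for this example and should be confirmed against the vendor's current documentation. The impersonator set contains only the domains named in this article, so it is far from complete.

```python
from urllib.parse import urlparse

# Assumption: hostnames treated as official for this sketch.
# Confirm the current official hostnames before relying on this set.
OFFICIAL_HOSTS = {"chat.openai.com", "openai.com"}

# Impersonation domains named in this article (not an exhaustive list).
KNOWN_IMPERSONATORS = {
    "chat-gpt-pc.online",
    "chat-gpt-online-pc.com",
    "chatgpt4beta.com",
    "chat-gpt-ai-pc.info",
    "chat-gpt-for-windows.com",
}


def classify_url(url: str) -> str:
    """Return 'official', 'known-impersonator', or 'unverified'."""
    parsed = urlparse(url)
    if not parsed.netloc:
        # Bare domains such as "example.com" have no netloc until
        # they are prefixed with "//".
        parsed = urlparse("//" + url)
    host = (parsed.hostname or "").lower().rstrip(".")
    if host.startswith("www."):
        host = host[4:]

    if host in OFFICIAL_HOSTS:
        return "official"
    # Match a listed domain or any subdomain of it.
    if any(host == d or host.endswith("." + d) for d in KNOWN_IMPERSONATORS):
        return "known-impersonator"
    return "unverified"
```

Exact hostname matching is deliberate: a lookalike such as `chat.openai.com.evil.example` is reported as unverified rather than official. A result of "unverified" only means the site is not on either list, not that it is safe.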

ChatGPT Malware Distribution

AI chatbots, including ChatGPT, can be leveraged as a medium for creating and distributing malware. Cybercriminals may embed malicious links or attachments within the conversation to trick users into downloading and executing harmful software. Quite a few cases have already shown that ChatGPT can be tricked into writing malicious code, and while this gap in the tool’s security has been patched, it’s only a matter of time until the next one is found.

It is crucial to exercise caution and refrain from clicking on suspicious links or downloading files from untrusted sources in day-to-day work, including during chatbot interactions.

ChatGPT Data Privacy and Security

The vast amount of personal information shared during conversations with AI chatbots poses significant data privacy and security risks. ChatGPT absorbs, stores, and processes the data that users enter. This enriches the collective knowledge of the tool, but it also potentially makes the tool a target for data breaches or unauthorized access.

Users should ensure that the chatbot service they are interacting with adheres to robust data protection practices, including encryption, secure storage, and strict access controls. For example, in April, employees in Samsung’s semiconductor division accidentally shared confidential information while using ChatGPT for help at work. The resulting data leak was estimated in gigabytes. As a consequence of incidents like this, nation-states might now target ChatGPT as a tool and OpenAI as a company.
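One way to reduce the chance of accidental disclosure of the kind described above is to scrub prompts before they leave the organization. The Python sketch below is a minimal illustration under stated assumptions, not a complete data loss prevention solution. The `redact` function and the two patterns in `PATTERNS` are hypothetical and cover only email addresses and simple `key=value` style secrets. A real deployment would rely on the organization's own rules.

```python
import re

# Hypothetical patterns for illustration only. Real policies would
# cover many more data types (source code, customer IDs, and so on).
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SECRET": re.compile(r"(?i)\b(?:password|api[_-]?key|token)\s*[:=]\s*\S+"),
}


def redact(prompt: str) -> tuple[str, list[str]]:
    """Replace matches with placeholders and report which labels fired."""
    findings = []
    for label, pattern in PATTERNS.items():
        prompt, count = pattern.subn(f"[{label} REDACTED]", prompt)
        if count:
            findings.append(label)
    return prompt, findings


if __name__ == "__main__":
    text, found = redact("Contact alice@example.com, password=hunter2")
    print(text)   # Contact [EMAIL REDACTED], [SECRET REDACTED]
    print(found)  # ['EMAIL', 'SECRET']
```

Returning the list of triggered labels alongside the cleaned text lets a policy layer decide whether to block the prompt, warn the user, or simply log the event.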

While AI-powered conversational agents like ChatGPT offer exciting possibilities, it is crucial to be aware of the cyber risks they present and to respond accordingly. The risk of falling victim to phishing attacks, social engineering exploitation, malware distribution, data privacy breaches, impersonation, and manipulation could be dire for an organization.

Users, and CISOs in particular, must adopt a proactive approach to protect themselves and enforce company policies on the use of these tools. They can accomplish this by implementing best practices, such as being cautious about sharing sensitive information, verifying the authenticity of chatbot interactions, and maintaining robust cybersecurity measures. Additionally, organizations developing integrations with AI chatbots like ChatGPT must prioritize data security, implement strong authentication mechanisms, and educate users about potential risks.

By staying informed and taking the necessary precautions, especially by setting forth policies and preemptive measures, we can enjoy the benefits of AI while minimizing the cyber risks of ChatGPT and similar conversational agents.